Network Interfaces

- Overview

- Network Interface Configlets

- PCAP

- TPACKETv3

- NFQUEUE

- Berkeley Packet Filters (BPF)

- Next Steps

Overview

The Netify Agent is typically installed in physical or virtual hosts within a network environment to capture and analyze traffic in near real time. Two modes of capture exist:

Gateway Mode

The Netify agent can be installed on a gateway device: firewalls, routers, access points, aggregators, etc. This gateway mode provides a way to analyze what's on the network and control network traffic using the Netify Flow Actions plugin.

Mirror Port Mode

If your network switches support port mirroring (sometimes referred to as a span port), mirror port mode lets you connect the mirrored port to a standalone Netify DPI Agent. Tapping into the network in this mode allows traffic to be analyzed passively.

Network Interface Configlets

The /etc/netifyd/interfaces.d folder provides a place to drop network interface configuration for Netify DPI. There are several options available, but a minimal configuration requires the following:

- Interface name

- Capture driver

- Network role

After adding or changing a configlet, reload the agent:

sudo service netifyd reload

To list the configlets currently in place:

ls -l /etc/netifyd/interfaces.d/

total 4

-rw-r--r-- 1 root root 437 Mar 4 22:31 10-lan.conf

Files in the interfaces.d directory must begin with a two-digit numeric value from 00 to 99, followed by a dash, and end in '.conf' for them to be parsed by the agent and included on startup.

A sample interface configuration file is shown below.

# Netify Agent Example Capture Interface Configuration

# Copyright (C) 2023 eGloo Incorporated

#

##############################################################################

# Example eth0 PCAP capture source

##############################################################################

[capture-interface-eth0]

capture_type = pcap

role = lan

address[0] = 10.0.0.0/24

address[1] = 172.16.100.0/24
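The file naming rule for the interfaces.d directory described above can be checked with a short script. The following Python sketch is illustrative only: the regular expression is one interpretation of the rule as written (two digits, a dash, and a '.conf' suffix), and the function name is hypothetical rather than part of Netify.

```python
import re

# Two ASCII digits (00-99), a dash, any remaining name, and a ".conf" suffix.
# This pattern is an assumption based on the rule stated in the text.
CONFIGLET_NAME = re.compile(r"[0-9]{2}-.*\.conf")


def is_valid_configlet_name(filename: str) -> bool:
    """Return True if the file name follows the naming rule described above."""
    return CONFIGLET_NAME.fullmatch(filename) is not None


if __name__ == "__main__":
    for name in ("10-lan.conf", "lan.conf", "5-wan.conf", "20-wan.cfg"):
        print(name, is_valid_configlet_name(name))
```

A check like this could be run before reloading the agent to catch configlets that would otherwise be silently skipped.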

Apart from the required numeric prefix and '.conf' extension, the name of the configlet file is for human convenience only. Inside the file, the INI-style format organizes interface definitions by section.

Any number of interfaces can be defined in one file. Each section name consists of the prefix capture-interface- followed by the name of the interface the Netify agent should capture traffic on. For example, on a server with five network cards, four of which are being used to monitor mirror port traffic, you might have something like this:

[capture-interface-eth0]

capture_type = pcap

role = lan

[capture-interface-eth1]

capture_type = pcap

role = lan

[capture-interface-eth2]

capture_type = pcap

role = lan

[capture-interface-eth3]

capture_type = pcap

role = lan
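To illustrate how the section names map to interfaces, the sketch below reads a configlet with Python's standard configparser module and strips the capture-interface- prefix. This is not how the agent itself parses the file; the function name and the shortened sample text are assumptions made for the example.

```python
import configparser

PREFIX = "capture-interface-"

# Shortened sample configlet, using only keys that appear in this document.
SAMPLE = """
[capture-interface-eth0]
capture_type = pcap
role = lan
address[0] = 10.0.0.0/24

[capture-interface-eth1]
capture_type = pcap
role = lan
"""


def list_capture_interfaces(text: str) -> dict:
    """Map each interface name to the options defined in its section."""
    parser = configparser.ConfigParser(interpolation=None)
    parser.read_string(text)
    interfaces = {}
    for section in parser.sections():
        if section.startswith(PREFIX):
            interfaces[section[len(PREFIX):]] = dict(parser[section])
    return interfaces


if __name__ == "__main__":
    for name, options in list_capture_interfaces(SAMPLE).items():
        print(name, options)
```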

These interface names are generated by the networking stack of the Linux/BSD kernel. Two different tools are generally used to display them. Let's start with the more modern iproute2 package, using the command ip addr:

ip addr

1: lo: mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

...

2: wlp2s0: mtu 1500 qdisc noqueue state UP group default qlen 1000

...

3: enxd037457c9f29: mtu 1500 qdisc fq_codel state UP group default qlen 1000

...

On the same system, but using the older net-tools command, ifconfig:

ifconfig

enxd037457c9f29: flags=4163 mtu 1500

inet 10.16.16.106 netmask 255.255.255.0 broadcast 10.16.16.255

...

lo: flags=73 mtu 65536

inet 127.0.0.1 netmask 255.0.0.0

...

wlp2s0: flags=4163 mtu 1500

inet 192.168.71.128 netmask 255.255.255.0 broadcast 192.168.71.255

...

The interface names used in Netify's network interface configuration are the names at the start of each entry (in ip addr output, the name follows the index number). Examples of interface names:

- lo

- wlp2s0

- enxd037457c9f29

- br-lan

- eth0

- em1

- igb0

- wg1
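As an alternative to reading the output of ip addr or ifconfig by eye, the interface names can also be listed programmatically. The sketch below uses Python's standard socket.if_nameindex() call; the helper name and the idea of printing ready-made section headers are assumptions made for illustration, not a Netify feature.

```python
import socket


def interface_names() -> list:
    """Return the interface names known to the operating system."""
    return [name for _index, name in socket.if_nameindex()]


if __name__ == "__main__":
    for name in interface_names():
        # Print the section header a configlet would use for this interface.
        print(f"[capture-interface-{name}]")
```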

The role can be one of two values:

- lan

- wan

In most cases, the lan role should be used. This includes mirror port mode. When listening to both WAN and LAN traffic on a gateway, flows that are tracked in the connection tracking table are verified by the agent over Netlink and tagged with an internal flag. This flag surfaces as the JSON attribute ip_nat and may modify the behavior of downstream handlers. For example, Network Informatics will, by default, discard flows where ip_nat is set to true. This removes duplicate flows, on the assumption that the LAN interface is seeing the same data, which reduces the size of the data storage and keeps statistics clean.
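The effect of discarding flows on ip_nat can be sketched in a few lines of Python. Only the ip_nat attribute comes from the text above; the id field, the list-of-dictionaries layout, and the function name are hypothetical, and a missing ip_nat attribute is assumed to mean the flow is not NAT-tagged.

```python
def drop_nat_flows(flows: list) -> list:
    """Keep only flows that are not tagged with ip_nat = true."""
    return [flow for flow in flows if not flow.get("ip_nat", False)]


# Hypothetical flow records; real records carry many more attributes.
flows = [
    {"id": 1, "ip_nat": False},
    {"id": 2, "ip_nat": True},   # seen on the WAN side, assumed duplicate
    {"id": 3},                   # no ip_nat attribute: treated as not NAT
]

if __name__ == "__main__":
    print([flow["id"] for flow in drop_nat_flows(flows)])
```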

A note about tracking NAT flows in environments where FreeBSD is the host operating system: BSD does not have an equivalent to connection tracking/Netlink. For this reason, we recommend listening only on LAN interfaces on these platforms.

Netify's internal data structures identify the two communicating parties not as source and destination, but as:

- local

- other

The agent determines which party is local using the following rules:

- ip_address[x]: Defining your local IP subnets helps Netify determine a local device. A device whose IP address falls into any of these subnets is considered local.

- RFC 1918 (IANA Internal IP Blocks): A device is identified as local if it is assigned an IP address in one of the private IPv4 subnets 10.0.0.0/8, 172.16.0.0/12, or 192.168.0.0/16.

- If both communicating devices are identified as local by the above rules, the one with the lower IP address is treated as local.
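The rules above for choosing the local party can be sketched with Python's standard ipaddress module. This is an illustration of the described logic, not the agent's implementation: the function names are hypothetical, only IPv4 is considered, and because the text does not say what happens when neither party is local, the sketch simply returns None in that case.

```python
import ipaddress

# Private IPv4 blocks from RFC 1918.
RFC1918 = [
    ipaddress.ip_network("10.0.0.0/8"),
    ipaddress.ip_network("172.16.0.0/12"),
    ipaddress.ip_network("192.168.0.0/16"),
]


def is_local(ip: str, configured) -> bool:
    """True if ip is in a configured subnet or an RFC 1918 block."""
    addr = ipaddress.ip_address(ip)
    return any(addr in net for net in list(configured) + RFC1918)


def pick_local(a: str, b: str, configured=()):
    """Return the address treated as local, or None if neither qualifies."""
    nets = [ipaddress.ip_network(n) for n in configured]
    a_local, b_local = is_local(a, nets), is_local(b, nets)
    if a_local and b_local:
        # Tie-break: the numerically lower address is treated as local.
        return min(a, b, key=ipaddress.ip_address)
    if a_local:
        return a
    if b_local:
        return b
    return None


if __name__ == "__main__":
    print(pick_local("10.0.0.5", "8.8.8.8"))
    print(pick_local("192.168.1.20", "192.168.1.3"))
    print(pick_local("203.0.113.7", "8.8.8.8", configured=["203.0.113.0/24"]))
```

Note that the tie-break compares addresses numerically, so 192.168.1.3 is lower than 192.168.1.20 even though a plain string comparison would order them the other way.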

The capture type (capture_type) can be one of three supported modes:

- pcap (PCAP)

- tpv3 (TPACKETv3)

- nfqueue (NFQUEUE)

PCAP

In most cases, using Netify's implementation of the Libpcap wrapper for packet capture provides the simplest path forward. It is natively supported on all Linux and FreeBSD platforms and architectures, has minimal configuration, and supports the use of Berkeley Packet Filters (BPF).

TPACKETv3

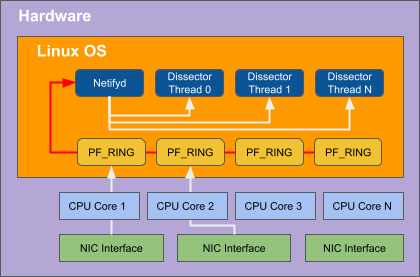

TPACKETv3 is a feature available in the Linux kernel's networking stack. Netify supports using this interface to capture and analyze flow data. It is especially useful for environments that require high-speed packet capture and processing and have both the processing and memory resources to do so.

By 'parallelizing' packet capture across multiple cores, the Netify Agent can sustain 10 Gbps of traffic without the use of specialized network hardware.

A sample configlet using TPACKETv3 is provided below.

# Netify Agent Example Capture Interface Configuration

# Copyright (C) 2024 eGloo Incorporated

#

##############################################################################

[capture-interface-eth0]

capture_type = tpv3

role = lan

fanout_mode = hash

fanout_flags = defrag, rollover

fanout_instances = 2

rb_block_size = 1048576

rb_blocks = 64

| Property | capture_type |

|---|---|

| Description | Select TPACKETv3 as the packet capture interface. |

| Type | string |

| Value | tpv3 |

| Property | role |

|---|---|

| Description | Select the interface role. In general, you should only need to listen on LAN interfaces. |

| Type | string |

| Options | lan, wan |

| Property | fanout_mode |

|---|---|

| Description | The default mode is hash. In AF_PACKET fanout mode, packet reception can be load-balanced among processes. This also works in combination with mmap on packet sockets. The currently implemented fanout policies are listed under Options. |

| Type | string |

| Options | hash, lb, cpu, rollover, random, qm |

| Property | fanout_flags |

|---|---|

| Description | To preserve order, enable defrag, which causes packets to be de-fragmented before fanout is applied. Adding the rollover option causes fanout to select the next available buffer if the preferred buffer is full. Either option, or both together, may be set. |

| Type | string |

| Options | defrag, rollover |

| Property | fanout_instances |

|---|---|

| Description | Number of threads to fan out across. |

| Type | integer |

| Default | 1 |

| Property | rb_block_size |

|---|---|

| Description | Ring buffer block size. Increasing block size decreases the chance of dropped packets at the expense of consuming more system memory. |

| Type | integer |

| Default | 4 MiB |

| Property | rb_blocks |

|---|---|

| Description | Number of ring buffer blocks. Multiplied by the ring buffer block size (rb_block_size), this determines the total amount of memory required to operate the ring buffer. |

| Type | integer |

| Default | 64 |
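As a worked example of the memory arithmetic described for rb_block_size and rb_blocks, the following Python sketch multiplies the two values for the sample configlet and for the documented defaults. The function name is hypothetical, the default block size is read as 4 MiB, and the text does not state whether this amount is allocated once or per fanout instance, so only the single-buffer figure is computed.

```python
MIB = 1024 * 1024


def ring_buffer_bytes(rb_block_size: int, rb_blocks: int) -> int:
    """Total ring buffer memory: block size multiplied by block count."""
    return rb_block_size * rb_blocks


# Values from the sample configlet: 1048576-byte blocks, 64 blocks.
sample_total = ring_buffer_bytes(1048576, 64)

# Documented defaults, assuming a 4 MiB block size and 64 blocks.
default_total = ring_buffer_bytes(4 * MIB, 64)

if __name__ == "__main__":
    print(sample_total // MIB, "MiB")    # 64 MiB
    print(default_total // MIB, "MiB")   # 256 MiB
```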

NFQUEUE

NFQUEUE can be used to efficiently and specifically manage which packets enter the DPI engine and which do not. By default, Netify's NFQUEUE implementation always sets the verdict to accept.

This capture method supports multi-threading, allowing the division of labor across multiple cores.

| Property | queue_instances |

|---|---|

| Description | Number of queue worker threads. |

| Type | integer |

| Default | 1 |

Berkeley Packet Filters (BPF)

Berkeley Packet Filters can be a useful tool to restrict the type of flows that are analyzed by the Netify DPI agent. A good resource on creating BPF filters can be found here.

To apply a filter, add the filter directive to the section of the interface that should be filtered. Example:

[capture-interface-eth0]

capture_type = pcap

role = lan

filter = dst port 80 or dst port 443
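When the list of ports is maintained elsewhere, a filter expression like the one above can be generated rather than typed by hand. The Python sketch below builds the same "dst port" expression from a list of port numbers; the function name is hypothetical, and the result is just a string to paste into the filter directive.

```python
def dst_port_filter(ports) -> str:
    """Build a BPF expression matching any of the given destination ports."""
    return " or ".join(f"dst port {int(port)}" for port in ports)


if __name__ == "__main__":
    # Reproduces the expression used in the example configlet.
    print("filter =", dst_port_filter([80, 443]))
```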